Let’s start off on a light note. A long time ago, when computers were still new (yes, it was that long ago) and I was at my first academic post, the head of the division dealing with computers gave a talk on artificial intelligence. After the talk, one of the humanities faculty in the audience asked, “Would you want your daughter to marry one (i.e., a computer)?” Legend has it (I wasn’t there) that he answered, “Yes, if she loved him.” Another version of the legend has someone shouting out after the question, “Why not? His wife did.”

A necessary condition for computers or robots to have a soul is that they be self-aware, that is, conscious. If this is not possible, then there would be no way we could think that devices with “artificial intelligence” had souls. So in this section we’ll focus on whether computers and robots can be self-aware. Another way of putting this is to ask whether “artificial intelligence” (AI) is in fact possible: whether computers and robots are intelligent, able to think independently, outside a set of prescribed rules or algorithms. Below we’ll examine the different answers that AI experts and philosophers have given to this question.

Turing's Argument for Computer Souls

Turing Test for Computer Intelligence: observer asks questions of human (behind screen) and computer (behind screen). If questioner can’t tell from the answers which is the computer and which the human, the computer has passed a test for intelligence.

Animation from Wikimedia Commons.

Theological Objection: ‘Thinking is a function of man’s immortal soul. God has given an immortal soul to every man and woman, but not to any other animal or to machines. Hence no animal or machine can think.’

Rebuttal to Objection: ‘It appears to me that (The Theological Objection) implies a serious restriction of the omnipotence of the Almighty. It is admitted that there are certain things that He cannot do such as making one equal two, but should we not believe that He has freedom to confer a soul on an elephant if He sees fit? We might expect that He would only exercise this power in conjunction with a mutation which provided the elephant with an appropriately improved brain to minister to the needs of this soul.’

- Alan Turing, Computing Machinery and Intelligence

Alan Turing was one of the founders of computer science, the theory of how computers work and what they can do. During World War II he led a group of British cryptographers working to break the German “Enigma” code. His life, an unhappy one, has been the subject of books and plays.

He proposed a test for deciding whether computers could be called intelligent (see the animation above). His “Turing Test” is purely behavioristic: there are two terminals; behind one is a person, behind the other a computer. You type questions at each terminal and, on the basis of the answers, decide which one hides the computer. If you can’t tell the difference, then, by this objective evidence, the computer can think as well as a human can.
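
Because the test is a protocol, it can be sketched in a few lines of Python. This is a toy model under simplifying assumptions, not Turing’s own formulation: the respondents and the judge are ordinary functions, the interrogation is a fixed list of questions rather than a free conversation, and every name here (`imitation_game`, `identification_rate`, the sample respondents and judge) is hypothetical. What it shows is the purely behavioral criterion: the judge sees only labelled transcripts, and the machine succeeds when the judge picks it out no more often than chance.

```python
import random
from typing import Callable, Dict, List, Tuple

Respondent = Callable[[str], str]               # maps a typed question to a typed answer
Transcript = List[Tuple[str, str]]              # (question, answer) pairs seen at one terminal
Judge = Callable[[Dict[str, Transcript]], str]  # names the label it thinks hides the computer


def imitation_game(questions: List[str], human: Respondent, machine: Respondent,
                   judge: Judge, rng: random.Random) -> bool:
    """Play one round; return True if the judge correctly picks out the computer."""
    labels = ["A", "B"]
    rng.shuffle(labels)  # hide who sits behind which terminal
    hidden = {labels[0]: human, labels[1]: machine}
    transcripts = {label: [(q, respond(q)) for q in questions]
                   for label, respond in hidden.items()}
    return judge(transcripts) == labels[1]


def identification_rate(questions: List[str], human: Respondent, machine: Respondent,
                        judge: Judge, rounds: int = 1000, seed: int = 0) -> float:
    """Fraction of rounds in which the judge spots the computer (0.5 = pure guessing)."""
    rng = random.Random(seed)
    hits = sum(imitation_game(questions, human, machine, judge, rng) for _ in range(rounds))
    return hits / rounds


# Hypothetical respondents and judge, for illustration only.
QUESTIONS = ["Do you like coffee?", "What is 2 + 2?"]


def human(q: str) -> str:
    return "Yes, black." if "coffee" in q else "Four."


def clumsy_bot(q: str) -> str:
    return "BEEP"


good_bot = human  # answers exactly as the human does


def judge(transcripts: Dict[str, Transcript]) -> str:
    for label, transcript in sorted(transcripts.items()):
        if any(answer == "BEEP" for _, answer in transcript):
            return label
    return "A"  # nothing to go on, so guess


print(identification_rate(QUESTIONS, human, clumsy_bot, judge))  # 1.0
print(identification_rate(QUESTIONS, human, good_bot, judge))    # close to 0.5
```

A machine that answers “BEEP” is caught every time, while one whose answers match the human’s leaves the judge guessing. Nothing in the criterion looks inside the respondents, which is exactly the feature the objections below target.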

A number of objections have been raised to the Turing Test as a measure of self-awareness. The best of these, I believe, is John Searle’s “Chinese Room” analogy. Imagine you are in a large room containing many, many manuscripts and instruction books. A question in Chinese, 你喜欢咖啡吗? (“Do you like coffee?”), is passed into the room, and you look up the directions for answering it. The instruction book lists the Chinese character you are to type out in reply: 是 (“Yes”). You don’t know Chinese and don’t understand the characters, yet the question is answered correctly. Clearly there is no understanding of Chinese as a language here, nor is the system (you plus the room) conscious or self-aware. There have been arguments about the validity of this analogy (see here), but I believe Searle’s reasoning is valid. He draws the important distinction between syntax (algorithmic processing, proceeding according to a set of rules) and semantics (intelligence, understanding the meaning of the rules and terms by association).
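
Searle’s room can be modeled, very crudely, as a lookup table. This Python sketch is an illustration under loose assumptions, not Searle’s own formulation: the `RULEBOOK` dictionary and the `normalize` and `chinese_room` functions are hypothetical, and the two rules stand in for the vast instruction books of the thought experiment (the second pairs 你会说中文吗? “Can you speak Chinese?” with 会 “I can”). The point it makes is Searle’s: the function matches input strings character by character and returns the paired output, and nothing in it represents what 咖啡 (coffee) or 是 (yes) mean.

```python
# Hypothetical rulebook: input symbol strings paired with output symbol strings.
# Keys and values are compared only as sequences of characters.
RULEBOOK = {
    "你喜欢咖啡吗?": "是",  # "Do you like coffee?" -> "Yes"
    "你会说中文吗?": "会",  # "Can you speak Chinese?" -> "I can"
}


def normalize(question: str) -> str:
    """Drop spaces and use an ASCII question mark so the shapes line up with the rulebook."""
    return "".join(question.split()).replace("？", "?")


def chinese_room(question: str) -> str:
    """Look up the reply by exact string matching; no step uses the meaning of any character."""
    return RULEBOOK.get(normalize(question), "?")


print(chinese_room("你 喜 欢 咖啡 吗?"))  # 是
print(chinese_room("你会说中文吗？"))     # 会
```

Enlarging the table, or replacing it with a cleverer algorithm, changes how convincing the answers are, but on Searle’s view it adds no understanding, since the processing remains purely syntactic.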

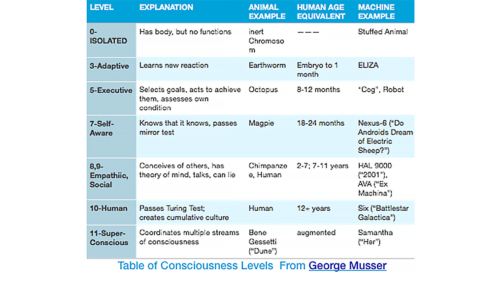

Degrees of Self-Awareness, Intelligence

Since there are degrees of self-awareness, consciousness, and intelligence among living things, we might expect that, if computers can be self-aware, machines too would show degrees of consciousness and intelligence. Such degrees of consciousness and intelligence have been nicely categorized by George Musser in an article in Aeon. His scheme is summarized in the accompanying table (Musser gives 13 degrees; I haven’t listed all of them).

Note that the last four machine (computer) examples come from science fiction stories, as does the last “animal” example. Note also that the scheme has no place for God as the ultimate, supreme consciousness.

ELIZA, a Computer Therapist

Here’s a Turing Test example: ELIZA, a computer program devised in the 1960s by Joseph Weizenbaum (MIT Artificial Intelligence Laboratory) to mimic a psychotherapist.

ELIZA: HELLO. I AM THE DOCTOR. PLEASE TELL ME ABOUT YOUR PROBLEM. (Type in complete sentences and always end your sentence with a period.)

patient: My mother makes me so mad sometimes.

ELIZA: CAN YOU GIVE ME AN EXAMPLE?

patient: She phoned me and expected me to drop everything.

ELIZA: TELL ME ABOUT YOUR FAMILY.

patient: I hate my parents.

ELIZA: WHY DO YOU HATE YOUR PARENTS?

patient: They won’t let me go.

ELIZA: IS IT IMPORTANT THAT THEY WON’T LET YOU GO?

patient: I’m suffocating.

… etc.

People who have used this program report feeling that they had encountered a real therapist; they talk about things they would otherwise bring up only with a therapist. So this program would probably pass a Turing Test. But it’s clear (I hope) that there is no self-awareness in the computer as the program proceeds. Whatever intelligence is manifested in the responses is that of the programmer, who anticipated likely inputs and correlated them with stock phrases.
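
ELIZA worked by spotting keywords in the patient’s sentence and transforming the sentence into a reply, swapping pronouns such as “my” for “your” along the way. The Python sketch below is loosely in that spirit; it is not Weizenbaum’s program or his script. The rules, the one-item family “memory”, and the names `Eliza`, `reflect`, `RULES`, and `FAMILY` are hypothetical, chosen only so that the sketch reproduces the four ELIZA replies in the excerpt above.

```python
import re

# Pronoun swaps applied to the patient's words before they are echoed back.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# Words that trigger the one-item "memory" about family.
FAMILY = re.compile(r"\b(?:mother|father|sister|brother|parents)\b", re.IGNORECASE)

# Ordered (pattern, reply template) rules; the first pattern that matches wins.
RULES = [
    (re.compile(r"\bi hate (.+)", re.IGNORECASE), "WHY DO YOU HATE {0}?"),
    (re.compile(r"^(they|she|he) ((?:won't|won’t|can't|can’t) .+)", re.IGNORECASE),
     "IS IT IMPORTANT THAT {0} {1}?"),
    (re.compile(r"\b(?:sometimes|always|often)\b", re.IGNORECASE), "CAN YOU GIVE ME AN EXAMPLE?"),
]


def reflect(fragment: str) -> str:
    """Swap first- and second-person words, e.g. 'my parents' -> 'your parents'."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


class Eliza:
    def __init__(self) -> None:
        self.family_pending = False  # set when a family word appears, used once later

    def respond(self, sentence: str) -> str:
        text = sentence.strip().rstrip(".!?")
        if FAMILY.search(text):
            self.family_pending = True
        for pattern, template in RULES:
            match = pattern.search(text)
            if match:
                # Fill the template with the patient's own (reflected) words.
                return template.format(*(reflect(g) for g in match.groups())).upper()
        if self.family_pending:
            self.family_pending = False
            return "TELL ME ABOUT YOUR FAMILY."
        return "PLEASE GO ON."


doctor = Eliza()
for line in ["My mother makes me so mad sometimes.",
             "She phoned me and expected me to drop everything.",
             "I hate my parents.",
             "They won’t let me go."]:
    print("patient:", line)
    print("ELIZA:  ", doctor.respond(line))
```

Every reply is a template filled in with the patient’s own words; the program has no model of mothers, parents, or suffocation.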

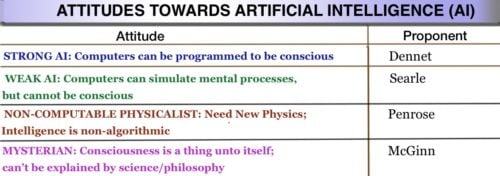

Philosophers Differ on Computer Consciousness

Clearly one has to answer the question “Can a computer be self-aware?” before answering “Could a computer have a soul?” AI experts and philosophers of mind such as Dennett and the Churchlands say yes to the first and refuse to discuss the second. Thinkers who say no to the first question (Searle, Penrose, McGinn) would, of course, say no to the second. The division of opinion on whether computers can think or be conscious is summarized in the accompanying table, proposed by Roger Penrose.

Daniel Dennett is an American philosopher who believes that consciousness is an illusion: the only thing occurring is the electrical and biochemical activity of neurons, and the brain functions very much as a “meat computer.” Dennett is a good example of the “mind emerges from brain” school of philosophers. Others in this school are Paul and Patricia Churchland and David Chalmers. Chalmers, however, believes that there might be something in addition to physiology contributing to the workings of the mind (although he wouldn’t say this something is the Holy Spirit), and he struggles with what that something is. See this panel discussion, in which Chalmers puts the chance that we live in a computer simulation at 42% (shades of The Matrix!); in particular, see the 1:38 mark for the odds the panelists give.

John Searle is an American philosopher of mind who believes that consciousness is a higher-level feature of biochemical and biophysical brain processes, much as the liquidity (“wetness”) of water is a higher-level feature of its molecular structure. However, Searle does not believe that consciousness can result from a computer-like mechanism (see the “Chinese Room” analogy above). Searle says that consciousness is a physiological property, like digestion (see this 2014 interview).

Roger Penrose is a British mathematician and physicist who believes that consciousness is directly linked to quantum processes, and that until a satisfactory theory of quantum gravity emerges there can be no truly complete theory of consciousness. Nevertheless, he and Stuart Hameroff, an anesthesiologist and physiologist, have proposed that quantum effects in the microtubules of neurons give rise to consciousness. In his books (The Emperor’s New Mind, Shadows of the Mind, Consciousness and the Universe), Penrose argues strongly that consciousness is not an algorithmic process. He uses Gödel’s Incompleteness Theorem and Turing’s Halting Theorem to argue that no algorithmic process can establish all the theorems of number theory that a human mathematician can. There have been many objections to this argument (search: “Penrose Turing Halting Theorem”), and his reasoning has not convinced those in the AI community that they are wrong to believe that a computer can be conscious or that the brain is a “meat computer.”
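
Turing’s Halting Theorem, on which this part of Penrose’s argument rests, says that no program can correctly decide, for every program, whether that program will eventually halt. The standard proof is a diagonal argument, sketched here in Python under obvious simplifications: “programs” are zero-argument functions, and `make_contrary` and `pessimist` are hypothetical names. Given any candidate decider `halts`, we build a program that asks the decider about itself and then does the opposite. Only the case where the decider predicts “runs forever” is executed, because in the other case the constructed program loops forever by design. The sketch illustrates Turing’s theorem only; Penrose’s further step, from this theorem to conclusions about human minds, is the part his critics dispute.

```python
from typing import Callable

# A "program" here is just a zero-argument Python function.
Program = Callable[[], object]
# A hypothetical halting decider: predicts whether running a program would finish.
Decider = Callable[[Program], bool]


def make_contrary(halts: Decider) -> Program:
    """Build a program that does the opposite of whatever `halts` predicts about it."""
    def contrary() -> object:
        if halts(contrary):
            while True:  # predicted to halt, so never halt
                pass
        return "halted"  # predicted to run forever, so halt at once
    return contrary


def pessimist(program: Program) -> bool:
    """A candidate decider that always predicts 'runs forever'."""
    return False


trap = make_contrary(pessimist)
print(pessimist(trap))  # False: the decider says trap never halts...
print(trap())           # ...yet trap() finishes immediately and returns "halted"
```

Whatever a decider answers about its own `trap`, it is wrong: if it says “halts”, `trap` loops; if it says “runs forever”, `trap` halts. So no decider can be right about every program.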

Colin McGinn is one of the “New Mysterians,” philosophers who believe that consciousness can never be understood scientifically because our powers of understanding are limited. He follows Chomsky and Nagel in holding that there are things about consciousness we cannot experience or “know”: if we are color blind, we can never know what seeing “red” is like, even if we know all there is to know about the neurons involved, the wavelength of the light that excites the red sensation, and so on. As Thomas Nagel argued in his groundbreaking article “What Is It Like to Be a Bat?”, we can never have the sensations of a bat, and so can never know what it is like to perceive the world by ultrasonic echoes.

Conclusion

So, what’s the verdict? It seems the jury is hung: no argument presented has been strong enough to convince the other side. My own judgment inclines toward that of the New Mysterians. Theirs is a view compatible with religious belief, including the belief that at the top of the scale of consciousness is the consciousness of the Trinitarian God, in which all of Plato’s and St. Augustine’s ideal forms reside.

This article is section two of Dr. Robert Kurland’s essay “Can Computers Have a Soul?” from his e-book, Truth Cannot Contradict Truth.