This is the third in a series of articles on the nature of time and the timelessness of God. Links to Parts I and II can be found at the bottom of the page.

In this post I’ll talk about entropy: how a change from order to disorder is measured as an increase in entropy, and thus how entropy is pictured as “the arrow of time.”

But first, let’s look into thermodynamics, because that’s where the concept of entropy originated.

A brief, brief look at thermodynamics

Rather than discussing entropy by means of definitions and formulae, I’ll set it in its natural context: thermodynamics. The First Law of Thermodynamics deals with energy (1): how energy changes form, and how you can’t get something from nothing. The Second Law of Thermodynamics deals with entropy: what entropy tells us about the conversion of heat to work and of order to disorder.

As Einstein saw it, classical thermodynamics, built on these two laws, is uniquely secure among physical theories:

“A theory is the more impressive the greater the simplicity of its premises is, the more different kinds of things it relates, and the more extended is its area of applicability. Therefore the deep impression which classical thermodynamics made upon me. It is the only physical theory of universal content concerning which I am convinced that within the framework of the applicability of its basic concepts, it will never be overthrown.” Albert Einstein, as quoted in “Albert Einstein: Historical and Cultural Perspective”

The rules of thermodynamics operate in all science, from cosmology to molecular biology to information theory. Space limitations will not allow me to tell you here, even at the “Thermo for Dummies” level, all you might want to know about thermodynamics. For the interested reader, there are quite a few online videos that do a good job of explaining thermodynamics. (See References, below.) In this discussion I’ll try to be simple and salient.

The First Law of Thermodynamics: conservation of energy

In the 19th century, engines that convert heat to work were the cornerstone of the Industrial Revolution. Science followed economics in order to understand how these heat engines worked, and thus was thermodynamics born. The first development was the realization that heat was a form of energy and could be converted to useful work (1). The First Law of Thermodynamics has to do with the conservation of energy, as when heat is changed to work in a steam engine. A perpetual motion machine of the first kind is impossible: you can’t get out more energy than you put in.
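The First Law’s bookkeeping can be sketched in a few lines of Python. This assumes the common convention ΔU = Q − W, where Q is the heat added to the system and W is the work done by the system (some textbooks instead write ΔU = Q + W, with W the work done on the system). The engine figures are hypothetical.

```python
def delta_internal_energy(heat_in: float, work_by_system: float) -> float:
    """First Law: ΔU = Q - W, with Q = heat added to the system and W = work done by it (joules)."""
    return heat_in - work_by_system


def violates_first_law(heat_in: float, heat_rejected: float, work_out: float) -> bool:
    """For an engine running in a cycle, ΔU = 0 each cycle, so work out cannot exceed net heat in.
    A machine claiming more would be a perpetual motion machine of the first kind."""
    return work_out > heat_in - heat_rejected


# Hypothetical steam-engine cycle: 1000 J of heat taken in, 700 J rejected to the surroundings.
print(delta_internal_energy(1000.0, 300.0))      # 700.0: heat in minus work out, with nothing rejected
print(violates_first_law(1000.0, 700.0, 300.0))  # False: 300 J of work balances the books
print(violates_first_law(1000.0, 700.0, 400.0))  # True: the extra 100 J would come from nothing
```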

In general, a system with different energy states available will go to the state of lowest potential energy (2), unless entropy intervenes, as we’ll see below. The difference in energy between the higher and lower states is given off by the system in some other form: work, heat, radiation, electrical or chemical energy.

The Second Law of Thermodynamics: entropy and heat flow

Rudolf Clausius noticed something very important about heat: it flows spontaneously from a higher temperature to a lower one. When you drop an ice cube into a cup of hot coffee, heat flows from the hot liquid to the cold ice cube and melts it. The greater the difference in temperature, the faster the heat flows from hot to cold.
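To see why heat flowing from hot to cold raises entropy, here is a small Python simulation. It assumes Newton’s law of cooling (heat flows at a rate proportional to the temperature difference, with a hypothetical conductance k) and two bodies with constant, made-up heat capacities. Each step moves a bit of heat q from the hot body to the cold one; the cold body gains q/T_cold of entropy while the hot body loses only q/T_hot, so the total rises until the temperatures are equal.

```python
def equilibrate(t_hot: float, t_cold: float, c_hot: float, c_cold: float,
                k: float, dt: float, steps: int) -> tuple[float, float, float]:
    """Let two bodies exchange heat at rate k * (t_hot - t_cold) (Newton's law of cooling).

    Temperatures in kelvin, heat capacities in J/K, k in W/K, dt in seconds.
    Returns (final t_hot, final t_cold, total entropy produced in J/K).
    """
    entropy_produced = 0.0
    for _ in range(steps):
        q = k * (t_hot - t_cold) * dt               # heat moved from hot to cold this step, J
        entropy_produced += q / t_cold - q / t_hot  # cold body gains q/T_cold, hot body loses q/T_hot
        t_hot -= q / c_hot
        t_cold += q / c_cold
    return t_hot, t_cold, entropy_produced


# Hypothetical values: a 350 K body and a 280 K body, each with a heat capacity of 1000 J/K.
t_hot, t_cold, ds = equilibrate(350.0, 280.0, 1000.0, 1000.0, k=10.0, dt=1.0, steps=2000)
print(round(t_hot, 3), round(t_cold, 3), ds > 0)  # both end near 315 K, and ds is positive
```

For these values the continuous-time answer is C ln(T_f² / (T_hot T_cold)) = 1000 ln(315² / (350 × 280)), about 12.4 J/K, which the step-by-step sum approximates. The time step has to be small enough that k·dt·(1/c_hot + 1/c_cold) stays well below 1, or the temperatures overshoot each other.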

So, here's how Clausius might have thought about this: "Ach, so! (Heavy German accent here, please!) Vat can I write that vill have heat and temperature in it? Let's call this new function 'entropy,' from the Greek ἐν τροπή, 'in trope,' that is, 'in change' or 'transformation.' And I'll denote it by the letter S." (Why S? I don't know.) "So, if we have a little bit of heat and a high temperature, the transformation would be small, so let's say that a little bit of S equals a little bit of heat transferred divided by temperature." Actually, Clausius used arguments from calculus to arrive at his definition. See the 1867 English translation of the work in which he defined entropy. (3)
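In modern notation, the rule Clausius arrives at in that imaginary monologue is usually written as follows, where δQ_rev is a small amount of heat transferred reversibly and T is the absolute temperature at which it is transferred. The second expression is the "adding up" of little bits of heat divided by T described in note 3.

```latex
dS = \frac{\delta Q_{\mathrm{rev}}}{T},
\qquad
\Delta S = S_{\mathrm{final}} - S_{\mathrm{initial}}
         = \int_{\mathrm{initial}}^{\mathrm{final}} \frac{\delta Q_{\mathrm{rev}}}{T}
```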

Entropy measures disorder

How does entropy change when you go from order to disorder? Let’s begin this inquiry by thinking about what “order” means.

Entropy, heat transfer and information

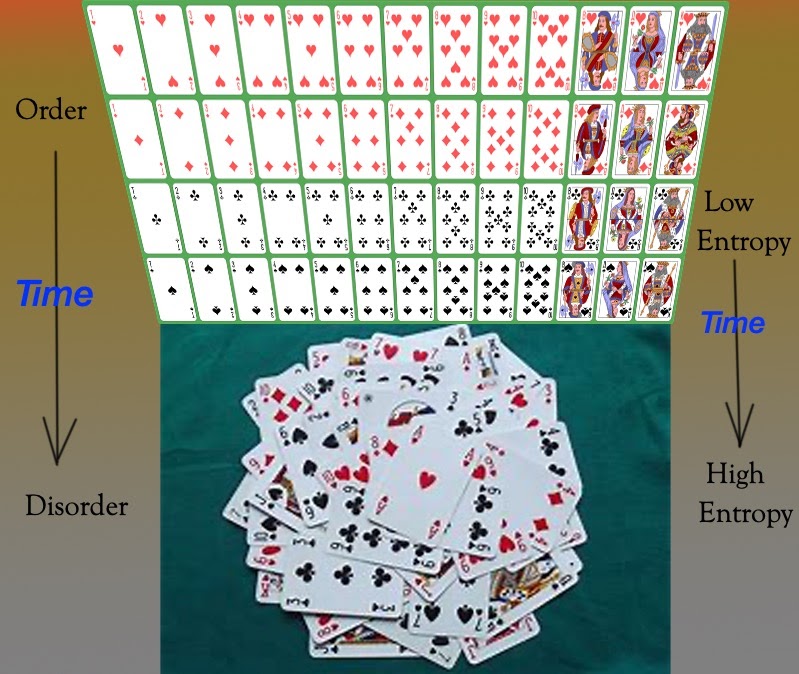

If you look at the image above, the top shows playing cards arranged in order. Suppose that, after you had looked at the array, someone asked you to put your finger on a card while blindfolded and then told you which row your finger was in and how far over. You’d be able to tell him or her which card you had picked (for example, bottom row, 10th over from the left, would be the ten of spades). In other words, you know which card is at each location; you have full information about the system.

The bottom picture, which looks as if the top array had been knocked down and mixed up, shows disorder. Even after being told where your finger was, you would probably not be able to say which card you had put it on (while blindfolded). Disorder is a lack of knowledge; you’re missing information about the system.
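The card picture can be made quantitative, as an analogy only. Assume every ordering of a 52-card deck counts as one distinct "arrangement": a shuffled deck then has W = 52! possible arrangements, while the ordered deck, whose order you know, has W = 1. The sketch below computes the missing information as log₂ W bits and, borrowing Boltzmann’s formula S = k ln W from note 7, the corresponding "entropy." Real cards do not change their thermodynamic entropy appreciably when shuffled; the point is only the counting.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact in the 2019 SI)


def deck_disorder(n_cards: int) -> tuple[float, float]:
    """Return (bits of missing information, k_B ln W) for a fully shuffled deck.

    W = n_cards! counts the possible orderings; math.lgamma(n + 1) gives ln(n!)
    without building the enormous integer.
    """
    ln_w = math.lgamma(n_cards + 1)
    bits = ln_w / math.log(2)
    return bits, K_B * ln_w


bits, s = deck_disorder(52)
print(f"{bits:.1f} bits, S = {s:.3e} J/K")  # about 225.6 bits of missing information
```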

Now, where’s the connection between entropy and order/disorder? Recall that a change in entropy occurs when heat is put into a system (3). What sorts of things happen when you transfer heat to something? Well, when you heat ice it becomes liquid water. When you heat liquid water it becomes steam, water as a gas. Generally, when you transfer heat to a system, the molecules in it get more energy, bounce around more and become less localized.

It should be clear that you know less about the positions of water molecules in liquid water than when they’re localized in definite positions in an ice crystal. Similarly, you know less about positions of water molecules when they’re roaming all over the room as steam than when they’re localized in a tea kettle. (4)
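Note 3’s formula ΔS = Q/T for a constant-temperature (isothermal) process makes the comparison between melting and boiling concrete. The sketch below assumes the commonly tabulated molar enthalpies for water, about 6.01 kJ/mol for melting at 273.15 K and about 40.65 kJ/mol for boiling at 373.15 K and 1 atm, and treats each phase change as reversible heat uptake at constant temperature and pressure.

```python
# Approximate molar enthalpies for water (commonly tabulated values, used here for illustration).
DH_FUSION = 6.01e3          # J/mol absorbed on melting at 273.15 K (0 °C)
DH_VAPORIZATION = 40.65e3   # J/mol absorbed on boiling at 373.15 K (100 °C), 1 atm


def isothermal_entropy_change(heat_j: float, temp_k: float) -> float:
    """ΔS = Q/T for heat absorbed reversibly at constant temperature (note 3)."""
    return heat_j / temp_k


ds_melt = isothermal_entropy_change(DH_FUSION, 273.15)
ds_boil = isothermal_entropy_change(DH_VAPORIZATION, 373.15)
print(round(ds_melt, 1), round(ds_boil, 1))  # 22.0 108.9, in J/(mol·K)
```

The entropy gain on boiling is about five times that on melting, in line with the picture of molecules becoming far less localized in the gas than in the liquid.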

Moreover, entropy can increase even when there is little or no heat transfer, as in the following example. Suppose you take a glass of water and put a teaspoonful of lemon flavoring into it. The entropy will increase: before you put the lemon flavoring into the water it was localized in the teaspoon; afterwards it’s dispersed through the whole glass. The molecules constituting the lemon flavoring have much more room to roam around, and you have less knowledge of where a particular molecule might be. This sort of entropy change is termed “entropy of mixing.”
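The entropy of mixing can be estimated with ΔS_mix = −R Σ nᵢ ln xᵢ, where nᵢ are amounts in moles and xᵢ are mole fractions, under the strong assumption that the mixture is ideal (no special attractions or repulsions between the molecules). A real glass of lemon-flavored water is not an ideal solution, and the amounts below are hypothetical; the point is that ΔS_mix is positive whenever more than one component is present.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol·K)


def ideal_mixing_entropy(moles: list[float]) -> float:
    """ΔS_mix = -R * Σ n_i ln(x_i) for an ideal mixture, in J/K."""
    total = sum(moles)
    return -R * sum(n * math.log(n / total) for n in moles if n > 0)


print(round(ideal_mixing_entropy([1.0, 1.0]), 2))  # 11.53: equal amounts give 2R ln 2
print(ideal_mixing_entropy([13.9, 0.01]) > 0)      # True: about a glassful of water plus a pinch of solute
```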

Spontaneous changes, reversibility, irreversibility

The example above, dropping lemon flavoring into a glass of water, illustrates an “irreversible process,” or spontaneous change. Other examples are scrambling an egg, dropping a plate on the floor and breaking it, and popping a balloon. In each case, work would have to be done (energy would have to be expended) to restore the system to the state it was in at the beginning of the process.

Given this picture of irreversible processes, what is a “reversible process”? Just what the name says: you can go either way, from beginning to end or from end to beginning, at every stage of the process, with just a tiny push in the right direction. Here’s a simple example: a billiard ball rolls slowly on a flat billiard table, and a little push can set it moving in the opposite direction. Here’s another: liquid water sits in a closed container with the air above it at 100% humidity (5). Water molecules leave the liquid at the same rate as they condense from the vapor back into it, so there is no net evaporation, and a slight warming or cooling would tip the balance one way or the other.

In general, reversible processes occur while a system stays in a state of equilibrium at every stage, with no net mechanical, chemical or electrical forces driving it. Clearly this is not the usual state of affairs, so reversible processes are essentially idealizations of what actually goes on in the real world. For example, in engine calculations the expansion of the hot gas that drives a piston when fuel is ignited in the cylinder, and its later compression, are approximated as reversible processes.
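The difference between reversible and irreversible processes shows up in the entropy of the gas plus its surroundings. As a minimal sketch, assume an ideal gas at constant temperature that doubles its volume in one of two ways: slowly and reversibly against a piston, drawing heat Q = nRT ln(V₂/V₁) from the surroundings; or irreversibly, by rushing into a vacuum (like the air from a popped balloon in note 3) with no heat or work exchanged. The gas ends in the same state either way, so its own ΔS is the same; only the surroundings differ.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol·K)


def isothermal_expansion_entropy(n_mol: float, v1: float, v2: float,
                                 temp_k: float, reversible: bool) -> tuple[float, float]:
    """Return (ΔS of the ideal gas, ΔS of the surroundings) in J/K."""
    ds_gas = n_mol * R * math.log(v2 / v1)  # entropy is a state function: same for both paths
    if reversible:
        q = n_mol * R * temp_k * math.log(v2 / v1)  # heat the gas absorbs from the surroundings
        ds_surroundings = -q / temp_k
    else:
        ds_surroundings = 0.0  # free expansion into a vacuum: Q = 0, W = 0
    return ds_gas, ds_surroundings


for rev in (True, False):
    gas, surr = isothermal_expansion_entropy(1.0, 1.0, 2.0, 300.0, reversible=rev)
    print(f"reversible={rev}: total ΔS = {gas + surr:.3f} J/K")
# reversible=True: total is zero up to rounding; reversible=False: R ln 2, about 5.763 J/K
```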

Entropy: “the arrow of time”

With the concepts above in mind, we can now look at why entropy is called “the arrow of time.” When spontaneous, irreversible processes occur, they almost always occur with an increase of entropy (6). Thus an increase in entropy is associated with irreversible processes: expansion of a gas into a vacuum, scrambling an egg, biological aging. Since irreversible processes run from past to future, not the reverse (an egg doesn’t unscramble spontaneously, spilled milk doesn’t jump from the floor back into the glass), an increase in entropy is a signpost for the direction in time in which events occur spontaneously. Thus, entropy is “the arrow of time.”

Now, physics is based on fundamental principles, one of which is the principle of microscopic reversibility. (This principle is equivalent to a time symmetry: physical laws hold just as well when the time parameter t is replaced by −t; the equations of physics look the same whether you go forward or backward in time.) So there seems to be an anomaly: although fundamental physics does not forbid it, running an entropic change backward in time is not the same as running it forward.
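Microscopic reversibility can be seen in a toy computation. The sketch below assumes a single frictionless mass on a spring (acceleration a = −x, in hypothetical units) and integrates Newton’s equations with the velocity Verlet scheme, which is itself time-reversible. Running forward, flipping the velocity and running forward again retraces the path back to the start, up to floating-point rounding: nothing in the equations prefers one direction of time.

```python
from typing import Callable


def velocity_verlet(x: float, v: float, accel: Callable[[float], float],
                    dt: float, steps: int) -> tuple[float, float]:
    """Integrate x'' = accel(x) with the time-reversible velocity Verlet scheme."""
    a = accel(x)
    for _ in range(steps):
        x = x + v * dt + 0.5 * a * dt * dt
        a_new = accel(x)
        v = v + 0.5 * (a + a_new) * dt
        a = a_new
    return x, v


def spring(x: float) -> float:
    return -x  # frictionless spring, hypothetical units with k/m = 1


x0, v0 = 1.0, 0.5
x1, v1 = velocity_verlet(x0, v0, spring, dt=0.01, steps=1000)   # run forward
x2, v2 = velocity_verlet(x1, -v1, spring, dt=0.01, steps=1000)  # reverse the velocity, run again
print(abs(x2 - x0) < 1e-9, abs(-v2 - v0) < 1e-9)  # True True: back where we started
```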

Moreover, deriving the Second Law from fundamental physics (via statistical mechanics) requires additional assumptions (7). A fundamental asymmetry between future and past has to be introduced into the derivation in order to arrive at the Second Law. One way of doing this is to set the initial conditions at the beginning of the universe in a special way (8).
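The role of special initial conditions can be illustrated with the Ehrenfest urn model, a standard toy model from statistical mechanics (swapped in here as an illustration; it is not the derivation discussed in note 7). N particles sit in two boxes, and at each step one particle, picked at random, hops to the other box. The rule treats both boxes alike, yet if the system starts in a special low-entropy state (all particles on the left), ln W, with W the number of ways to choose which particles are on the left, almost always climbs toward its maximum. The particle count, step count and seed below are arbitrary choices.

```python
import math
import random


def ehrenfest(n_particles: int, steps: int, seed: int = 0) -> list[int]:
    """Ehrenfest urn model: each step, one randomly chosen particle hops to the other box.

    Starts from the low-entropy state with every particle in the left box and
    returns the number of particles on the left after each step.
    """
    rng = random.Random(seed)
    left = n_particles
    history = [left]
    for _ in range(steps):
        # The chosen particle is in the left box with probability left / n_particles.
        if rng.random() < left / n_particles:
            left -= 1
        else:
            left += 1
        history.append(left)
    return history


def boltzmann_ln_w(n_particles: int, left: int) -> float:
    """S/k = ln W, where W = C(n, left) arrangements give the same left/right counts."""
    return math.log(math.comb(n_particles, left))


h = ehrenfest(100, 2000)
print(boltzmann_ln_w(100, h[0]), boltzmann_ln_w(100, h[-1]))  # starts at 0.0, ends much higher
```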

Despite this tension between the time symmetry of the equations of physics and entropy’s arrow of time, entropy is a fundamental concept in all branches of science. Black holes have an event horizon whose area determines the entropy of the black hole. Biopolymers (proteins, enzymes, nucleic acid chains) undergo structural changes governed by a contest between higher entropy and lower energy.
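The contest between energy and entropy is usually expressed with the Gibbs free energy, ΔG = ΔH − TΔS: at constant temperature and pressure, a change is spontaneous when ΔG < 0. The sketch below uses hypothetical numbers for a protein unfolding, which costs energy (ΔH > 0) but gains entropy (ΔS > 0). The lower-energy folded form wins at low temperature, the higher-entropy unfolded form wins at high temperature, and the crossover sits at T = ΔH/ΔS.

```python
def gibbs_change(dh: float, ds: float, temp_k: float) -> float:
    """ΔG = ΔH - TΔS in J/mol; negative means the change is spontaneous at constant T and p."""
    return dh - temp_k * ds


def crossover_temperature(dh: float, ds: float) -> float:
    """Temperature at which ΔG = 0, i.e. energy and entropy are evenly matched."""
    return dh / ds


# Hypothetical unfolding: costs 200 kJ/mol of enthalpy, gains 600 J/(mol·K) of entropy.
DH, DS = 200e3, 600.0
for t in (300.0, 350.0):
    dg = gibbs_change(DH, DS, t)
    print(t, dg, "unfolds" if dg < 0 else "stays folded")
print(round(crossover_temperature(DH, DS), 1))  # 333.3 K
```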

What’s To Come

In Part IV, I’ll discuss how relativity, special and general, requires us to revise Newton’s principle that time is an absolute dimension of reality. We should note that even with this revision of how we view time, entropy and energy can still be formulated within a relativistic context.

Notes and References

1 I’ll assume the reader has a qualitative notion of the various forms of energy: energy of motion, heat, work, gravitational, electrical, chemical. See this video for a good definition of energy. Water at the top of Niagara Falls has potential energy by virtue of its height: the force of gravity will make the water fall to the bottom. Water at the bottom of Niagara Falls has kinetic energy: energy of motion. The kinetic energy at the bottom will approximately equal the potential energy at the top (a small amount of energy is lost as heat and through evaporation of the water; see this video for a good picture of a falls). The energy of the falling water is converted to electrical energy at the Niagara Falls power stations, where moving water turns the turbines that drive electrical generators.

2 Systems tend to go to states of lowest potential energy (or lowest energy) because in these states the forces operating on the system are in equilibrium, which is to say there’s no net force acting on the system. A ball that has rolled to the bottom of a hill has no net mechanical force acting on it: at the flat bottom of the hill, the force of gravity is balanced by the force of the ground pushing back on the ball.

3 We get the relation below for the change in entropy, ΔS, for some change of state:

ΔS = adding up (little bits of heat/T)

If the temperature stays constant (what's called an "isothermal process"), you can add up the little bits of heat to get Q, the total heat transferred to the system (+Q) or from the system (-Q), and so

ΔS = Q/T or -Q/T (Q a positive number)

This formula only applies if the process by which the change occurs is “reversible.” If it’s irreversible, like popping a balloon, then you have

ΔS > Q/T or ΔS > -Q/T

For example, essentially no heat is transferred to the air in the balloon when it’s popped, since the process occurs so quickly, but you still have ΔS > 0 because the air expands.

4 See this video for a nice explanation of how entropy (disorder) increases when solid goes to liquid, liquid goes to gas.

5 “100% humidity” corresponds to the maximum concentration of water molecules in the vapor above the liquid at the given temperature. If you kept water at 100 degrees C (its normal boiling point) in a closed container with water vapor at 1 atm in the container, the amount of liquid water would remain constant even though individual water molecules are popping off the liquid surface and condensing back onto it.

6 If a system is isolated, or if an irreversible process occurs quickly enough (e.g., popping a balloon) that no heat is transferred to the system (Q = 0), then the Second Law says ΔS > 0: entropy increases. Note that the universe is, by definition, an isolated system, so ΔS for the universe is greater than 0 (the universe is not at equilibrium). Such an increase in entropy for the universe implies that it will cool down to some very low temperature in the very far future (“heat death”).

7 Ludwig Boltzmann explained entropy and thermodynamics in terms of the dynamics of molecules and probability considerations. He proposed this famous formula for entropy: S = k ln W (where S is entropy, k is the Boltzmann Constant, and W is the thermodynamic probability). W represents the number of different molecular arrangements that correspond to the same physical state. However, his attempt to derive the Second Law from statistical mechanics (the famous H-theorem) was not entirely adequate because of a hidden assumption about initial conditions.

8 Sir Roger Penrose, in his fine text, “The Road to Reality” (Chapter 27), has discussed this tension between time symmetry in the equations of physics and The Second Law. He proposes as one explanation special conditions at the beginning of the universe. Even though Penrose is not a theist, this hypothesis is consistent with the idea of a creating God.

Read Also:

What is Time? Part I—Philosophy